Perceptual AI-based Metrics (AZ Mate) Add-on

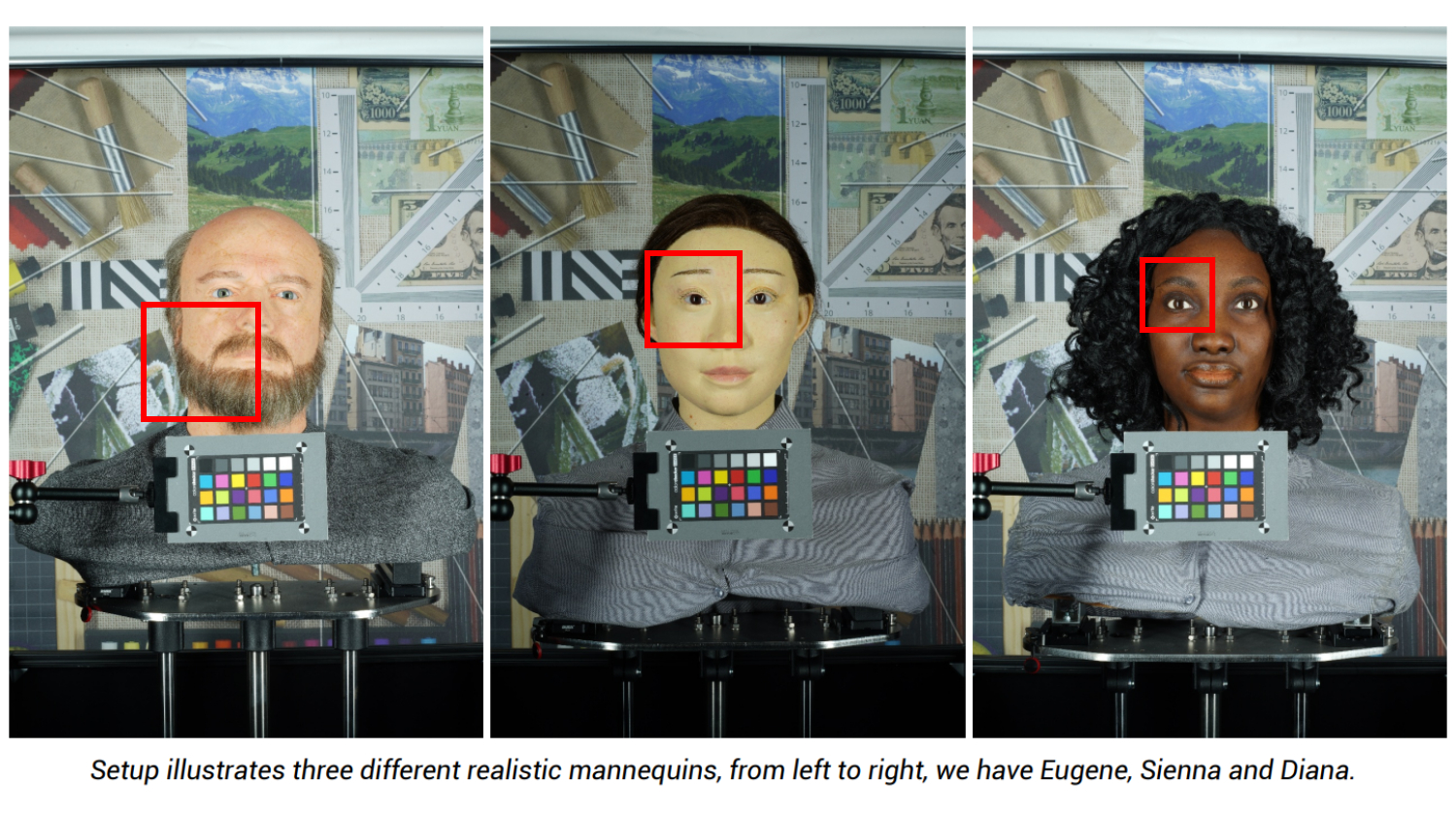

Detail Preservation Evaluation on a realistic scene

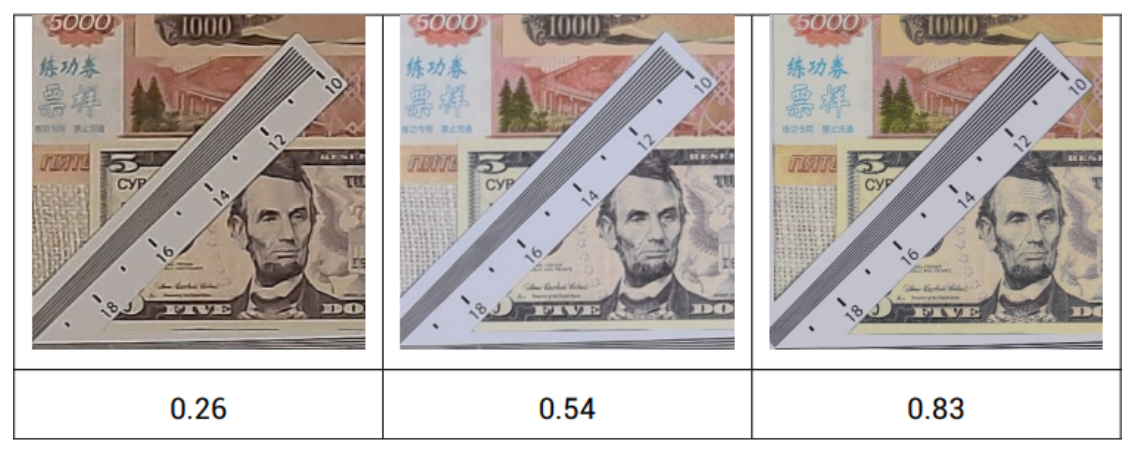

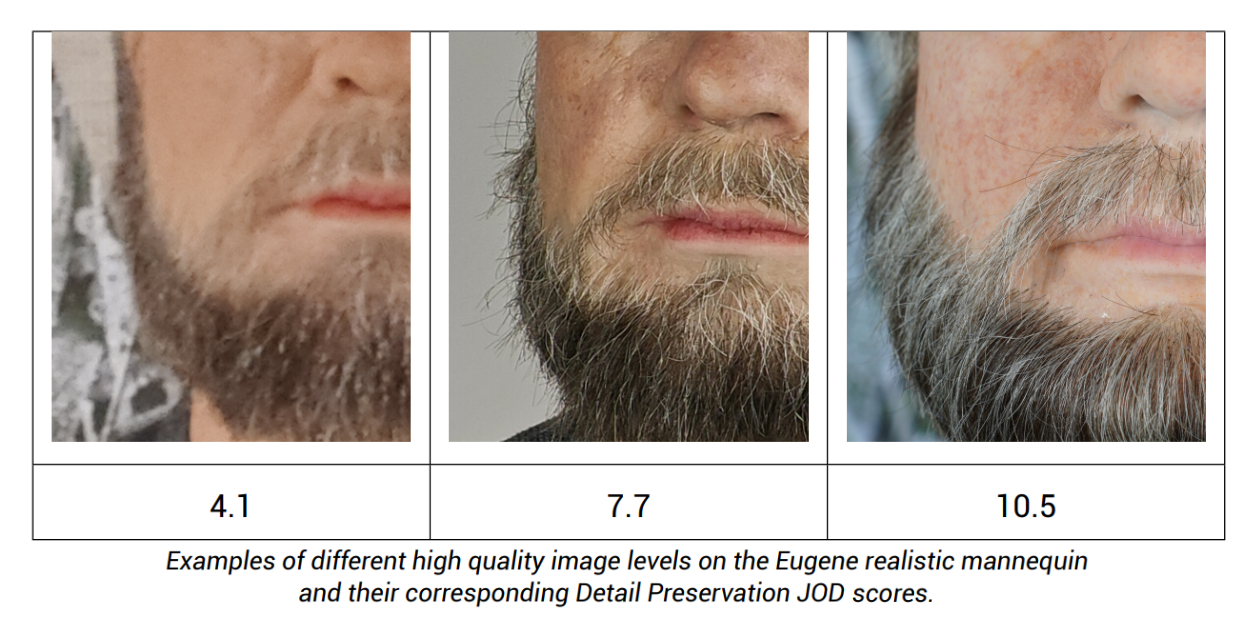

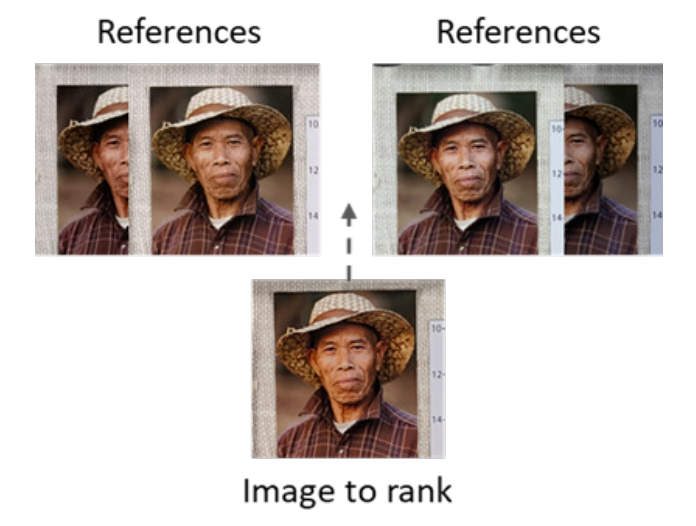

DXOMARK developed machine learning-based methods that estimate the image quality a human expert would perceive when evaluating pictures of known content. The detail preservation metric quantifies a camera's capacity to render sharp images that are both precise and pleasing, using a model trained on a dataset of images of the scene.

Key Highlights

- Photo & Video measurements

- Detail Preservation Metric

- Perceptual Noise Quality Metric

DSLR & Mirrorless

3D Camera

Drone & Action camera