Challenges

- Unwanted optical flare from bright light sources such as the sun or artificial lights can reduce contrast, dynamic range, and image quality. Flare impacts automotive, mobile, drone, and AR/VR cameras, making it critical to measure and control.

- Existing methods are often inconsistent, subjective, or difficult to replicate, preventing accurate comparisons between camera designs, coatings, or optical layouts. Engineering teams require:

- A repeatable lab setup to generate controlled flare patterns

- Objective metrics correlated with perceptual impact

- Compatibility with automated workflows

Solutions

- Realistic light source simulation: Collimated light with size and color close to real-world sources, used to evaluate flare with the source both inside and outside the field of view

- Wide angular coverage: Motorized source rotation from -160 to +160 degrees and multi-axis camera alignment for precise flare characterization

- Standards compliance and metrics: The methodology and computation have been shared with the standards committees, placing the metric at the heart of the IEEE 2020 automotive standard and under discussion for the upcoming ISO 18844-2

- Automation and integration: Supports automated acquisition, processing, and reporting with Python API control

- Broad applicability: Supports visible and NIR sources; compatible with other Analyzer tests, such as MTF

Results

- Precise quantification of flare intensity and distribution

- Repeatable, operator-independent results for design decisions and supplier benchmarking

- Actionable insights for optimizing coatings, optical designs, camera module designs, and final camera system integration

- Data that correlate lab and field observations

- Following its integration into the IEEE 2020 standard, the DXOMARK flare metric is under discussion for inclusion in ISO/AWI 18844-2

Context

Lens flare is a frequent and often unwanted optical artifact in photography and imaging systems. It typically occurs under high-luminance conditions, such as direct sunlight, strong artificial lighting, or active infrared (IR) illumination. Flare originates from internal reflections and scattering within the lens assembly and optical stack, including lens elements, coatings, filters, and protective covers. Depending on the optical design and illumination conditions, it can manifest as veiling glare, ghosting patterns, halos, or petal-shaped artifacts.

These flare artifacts significantly degrade image quality by reducing contrast, masking fine details, and lowering effective dynamic range. In advanced camera systems, flare can also interfere with downstream image processing pipelines and perception algorithms, making it a critical performance factor for applications such as automotive vision, surveillance, and mobile imaging.

At DXOMARK, addressing flare measurement posed a fundamental challenge: how to objectively characterize an inherently optical phenomenon while remaining independent of non-linear image processing applied by the device under test. To achieve this, DXOMARK designed a robust flare evaluation protocol operating directly on RAW image data. This approach ensures that measurements reflect the true optical behavior of the camera module rather than software-dependent tone mapping, HDR fusion, or flare suppression algorithms.

Examples of flare effects in a real-life scene and in a lab simulation

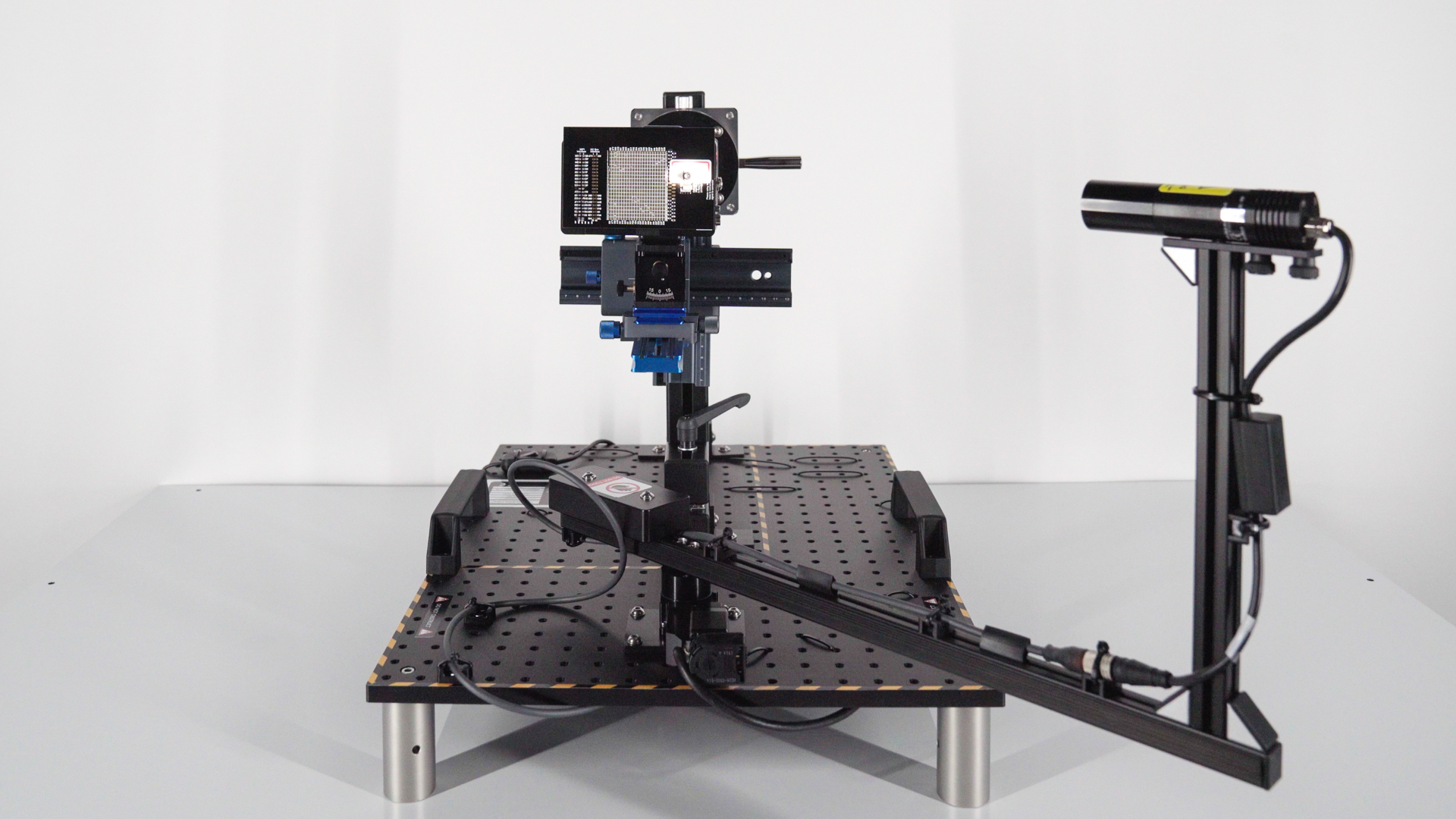

The COMPASS Bench used for flare evaluation, with an automotive demo board under test

To support this methodology, development started with the fundamental flare use case: a strong light source, such as the sun, illuminating the camera module at varying angles. To reproduce this scenario, DXOMARK designed a fully automated and compact hardware setup in which a high-intensity collimated light source, simulating the sun, rotates precisely around the device under test. This configuration enables controlled illumination across a wide range of incidence angles.

The measurement principle relies on strict optical isolation. Only light originating from the calibrated source, along with all resulting flare artifacts, reaches the sensor. By masking the direct image of the light source itself, the captured signal contains only flare-induced light. Through straightforward radiometric calculations, DXOMARK can then extract the flare intensity directly from the RAW capture.
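The masking and normalization step described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not DXOMARK's implementation: the function name `flare_ratio_map`, the `black_level` and `reference_signal` parameters, and the choice to normalize by a reference level for the light source are all hypothetical and introduced only for this example.

```python
def flare_ratio_map(raw, black_level, source_mask, reference_signal):
    """Return a per-pixel flare ratio map from a linear RAW frame.

    raw              -- 2D list of linear RAW pixel values
    black_level      -- sensor black level to subtract (assumed known)
    source_mask      -- 2D list of booleans, True where the direct image
                        of the light source falls (those pixels are excluded)
    reference_signal -- hypothetical reference level for the source, in the
                        same units as the black-subtracted RAW values
    """
    if reference_signal <= 0:
        raise ValueError("reference_signal must be positive")

    flare = []
    for row, mask_row in zip(raw, source_mask):
        out_row = []
        for value, masked in zip(row, mask_row):
            if masked:
                # Direct image of the source: not flare, so leave it out.
                out_row.append(None)
            else:
                # Everything else is attributed to flare-induced light.
                signal = max(value - black_level, 0)
                out_row.append(signal / reference_signal)
        flare.append(out_row)
    return flare


# Illustrative values only, not measured data.
raw_frame = [[64, 74], [1064, 84]]
mask = [[False, False], [True, False]]
flare_map = flare_ratio_map(raw_frame, 64, mask, 1000.0)
```

Working on black-subtracted linear values is what keeps the ratio independent of tone mapping or other non-linear processing; any additional calibration step (exposure normalization, per-channel handling) would need to be added for a real sensor.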

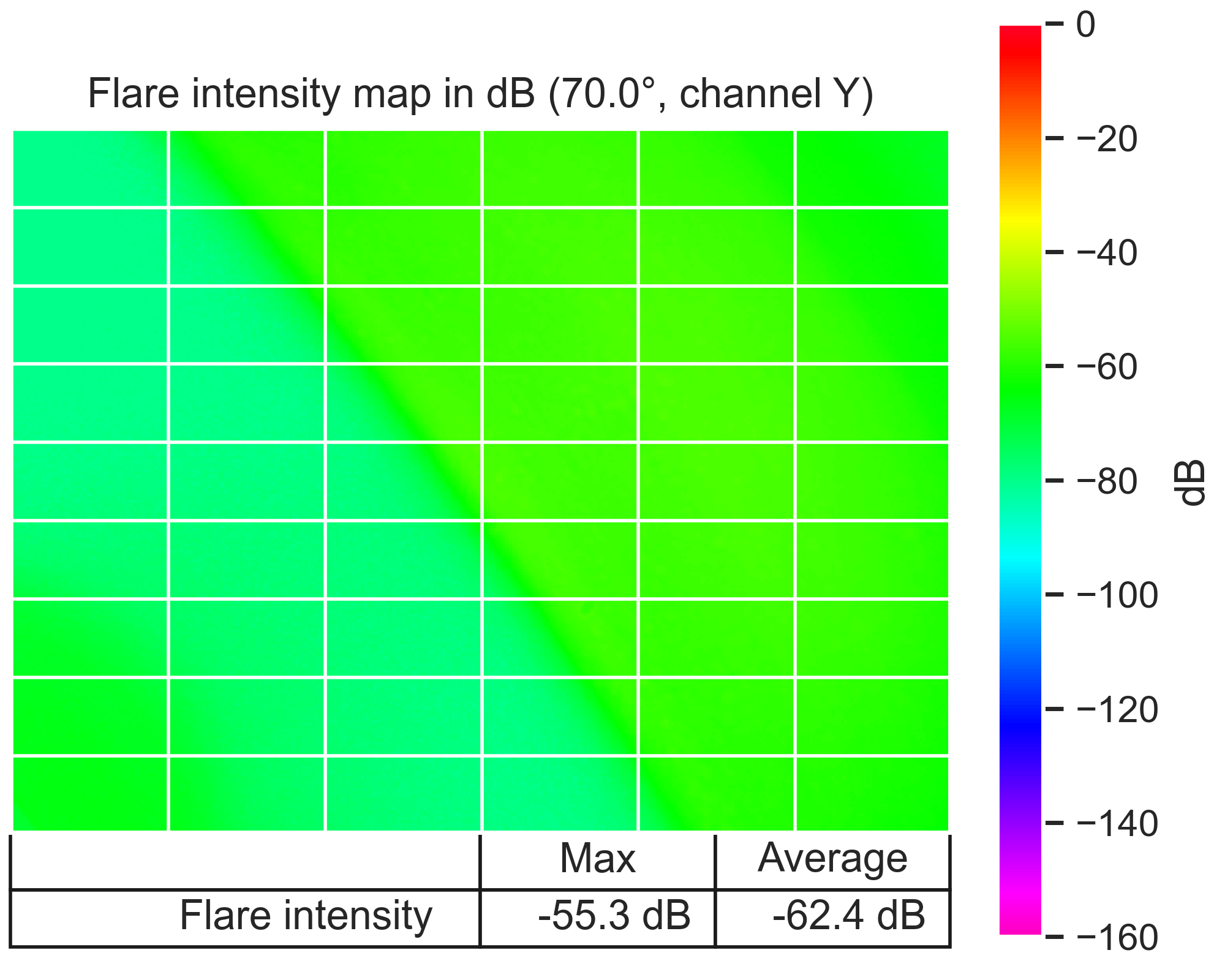

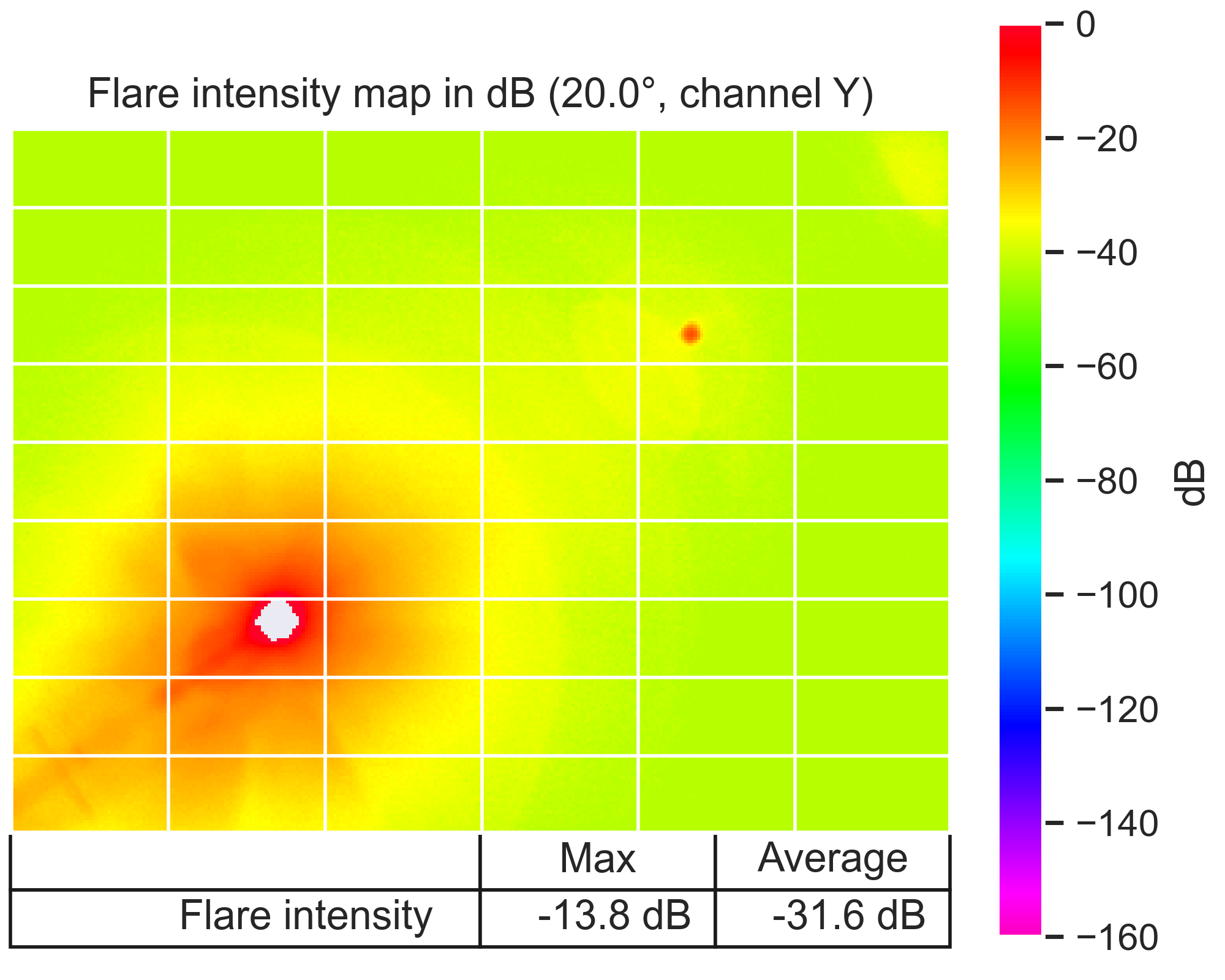

This technique enables both quantitative measurement and spatial mapping of flare across the entire image sensor. The methodology expresses flare both as a figure of demerit and as a figure of merit:

- Flare intensity, representing the amount of unwanted light reaching the sensor

- Flare attenuation, representing the optical system’s ability to suppress stray light

Both metrics are expressed in decibels (dB) and are numerically related. From the resulting flare maps, DXOMARK extracts two core key performance indicators:

- Average flare: a global indicator describing the overall flare distribution across the image

- Worst-case flare: a peak indicator highlighting the most critical flare behavior in localized areas of the image
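As a rough sketch of how these quantities could be derived from a flare map, the Python example below converts linear flare ratios to decibels and computes an average and a worst-case value. Several points are assumptions, since the text does not specify them: the `20 * log10` convention (exposed as the `factor` parameter), attenuation taken as the negative of intensity, averaging in the linear domain, and the worst case taken as the single highest pixel rather than a localized region. The names `to_db` and `flare_kpis` are hypothetical.

```python
import math


def to_db(ratio, factor=20.0):
    """Convert a linear ratio to decibels (factor is an assumed convention)."""
    return factor * math.log10(ratio)


def flare_kpis(ratio_map, factor=20.0, floor=1e-6):
    """Compute average and worst-case flare from a 2D map of linear ratios.

    Entries equal to None (for example, masked source pixels) are ignored.
    `floor` avoids taking the logarithm of zero.
    """
    values = [max(v, floor) for row in ratio_map for v in row if v is not None]
    if not values:
        raise ValueError("ratio_map contains no valid pixels")

    # Assumption: average in the linear domain, then convert to dB.
    average_db = to_db(sum(values) / len(values), factor)
    # Assumption: worst case is the highest single-pixel flare ratio.
    worst_db = to_db(max(values), factor)

    return {
        "average_intensity_db": average_db,
        "worst_intensity_db": worst_db,
        # Assumption: attenuation is intensity with the opposite sign.
        "average_attenuation_db": -average_db,
        "worst_attenuation_db": -worst_db,
    }


# Illustrative values only, not measured data.
kpis = flare_kpis([[0.001, 0.1], [None, 0.01]])
```

With this sign convention, a lower (more negative) intensity and a higher attenuation both indicate better stray-light suppression.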

Extract taken from the results provided by Analyzer software – Two flare intensity maps

Extract taken from the results provided by Analyzer software – Flare intensity max & average across source positions

Together, these KPIs give engineers a clear and actionable understanding of both global and localized flare performance. Because both values are measured at each source angle, they can be combined into a flare intensity profile across angles, which gives a high-level view of flare performance at a glance.
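Building such a profile is mostly a matter of ordering the per-angle results. The sketch below assumes the KPIs for each source angle are already available as a dictionary; the key names, the function names `flare_profile` and `most_critical_angle`, and the numbers in the usage lines are hypothetical placeholders, not output from Analyzer.

```python
def flare_profile(kpis_by_angle):
    """Return (angle, average_db, worst_db) tuples sorted by source angle.

    kpis_by_angle maps a source angle in degrees to a dict holding
    'average_intensity_db' and 'worst_intensity_db' (assumed key names).
    """
    return [
        (angle, kpis["average_intensity_db"], kpis["worst_intensity_db"])
        for angle, kpis in sorted(kpis_by_angle.items())
    ]


def most_critical_angle(profile):
    """Return the angle whose worst-case flare intensity is highest."""
    return max(profile, key=lambda entry: entry[2])[0]


# Made-up numbers for illustration only.
measurements = {
    30.0: {"average_intensity_db": -45.0, "worst_intensity_db": -30.0},
    -10.0: {"average_intensity_db": -50.0, "worst_intensity_db": -38.0},
    0.0: {"average_intensity_db": -48.0, "worst_intensity_db": -25.0},
}
profile = flare_profile(measurements)
critical = most_critical_angle(profile)
```

Keeping the profile as a plain sorted list makes it easy to plot or to export alongside the per-angle flare maps.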

This methodology introduces a new level of accuracy and repeatability in flare assessment. It supports evaluation across a wide range of camera modules and applications, including smartphone cameras, automotive imaging systems (with windshield integration directly on the test bench), and surveillance cameras operating in visible and NIR domains.

The resulting data provide valuable insight for OEMs, optical designers, and image quality engineers seeking to optimize lens architecture, optical coatings, windshield positioning, infrared filter performance, and overall system integration. Importantly, DXOMARK’s flare measurement protocol has been integrated into the IEEE 2020-2024 image quality standard, which serves as a key reference framework for automotive imaging performance evaluation.

Why They Came to Us

- Built for engineering accuracy, not just consumer benchmarking

- Objective metrics tied to physical models and emerging standards

- Integration with Analyzer for end-to-end lab workflows

- Expertise from decades of imaging science ensures tests reflect real-world performance
